When a Google Search result includes an AI Overview, or when you're in Google AI Mode, the AI-driven response depends on how the Gemini family of LLMs interprets intent, verifies information, and decides whether to cite sources and which ones.

At Hi, Moose, we’re exploring that process. We’re running experiments, studying how these models behave, and building tools that help SEOs and marketers understand how these systems make decisions and where to take action.

How Google AI Mode Actually Works (At Least, the Part We Can See)

Google has shared some details about how AI Mode and AI Overviews work under the hood.

The model uses two related techniques to build an answer: query fan-out¹ and grounding².

- Query fan-out happens when the system expands a question into smaller, related searches. It issues multiple queries across subtopics and data sources to explore the breadth of a topic. Think of it as the exploration phase.

- Grounding happens when the model runs real Google searches to verify facts and connect its text to verifiable web sources⁴. This is the evidence phase, and it’s also where citations come from.

Oversimplified, the difference between fan-out and grounding is this: fan-out builds breadth, and grounding builds evidence.

An important part for SEOs is that Google exposes grounding data through the Gemini API³. That gives us a rare look at the searches the model used to verify and support a response.
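To make that concrete, here is a minimal sketch of what a grounded request to the Gemini API might look like. It only builds the request and does not send it. The endpoint shape, the `x-goog-api-key` header, the `google_search` tool key, and the model name are assumptions based on Google's public Gemini API docs, and `build_grounded_request` is a hypothetical helper name, so check the current docs before relying on any of it.

```python
import json

# Assumptions (not from this article): endpoint shape, header name, tool key,
# and model name follow Google's public Gemini API docs and may change.
GEMINI_ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)


def build_grounded_request(prompt: str, api_key: str, model: str = "gemini-2.5-flash") -> dict:
    """Return the URL, headers, and JSON body for a grounded generateContent call."""
    return {
        "url": GEMINI_ENDPOINT.format(model=model),
        "headers": {
            "x-goog-api-key": api_key,
            "Content-Type": "application/json",
        },
        "body": {
            "contents": [{"parts": [{"text": prompt}]}],
            # Enabling the Google Search tool is what allows the model to ground.
            "tools": [{"google_search": {}}],
        },
    }


request = build_grounded_request(
    "healthy dog treats for Australian Shepherds", api_key="YOUR_API_KEY"
)
print(json.dumps(request["body"], indent=2))
```

Sending this body as a POST with any HTTP client should return a response whose candidates may carry grounding data. Whether they do depends on whether the model decides to search.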

What We Can and Can't See

We can't see everything. Google's fan-out queries happen behind the scenes, and Google hasn't made them public.

But grounding queries are visible, and that's one of the areas where SEOs can focus.

Through the Gemini API, Google returns a structured field called groundingMetadata³, which includes:

- webSearchQueries: the real Google searches the model ran for verification.

- groundingChunks: the sources or URLs the model referenced.

- groundingSupports: how specific parts of the model’s answer link back to those sources.

Together, this gives us one of the first observable layers of how Google Gemini interprets a prompt and what it considers credible enough to cite when it decides a response needs grounding.
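As a rough illustration, the sketch below pulls those three fields out of a single response candidate. The sample values are made up (the URLs are placeholders), and the nested keys (`web.uri`, `web.title`, `segment.text`, `groundingChunkIndices`) are assumed from Google's public Gemini API docs rather than taken from this article. `summarize_grounding` is a hypothetical helper name.

```python
from typing import Any

# Illustrative candidate with made-up values. Nested key names are assumptions
# based on Google's public Gemini API docs.
SAMPLE_CANDIDATE = {
    "groundingMetadata": {
        "webSearchQueries": [
            "grain-free dog treats for allergies",
            "low-fat snacks for active breeds",
        ],
        "groundingChunks": [
            {"web": {"uri": "https://example.com/grain-free", "title": "example.com"}},
            {"web": {"uri": "https://example.org/low-fat", "title": "example.org"}},
        ],
        "groundingSupports": [
            {
                "segment": {"text": "Grain-free treats can help dogs with allergies."},
                "groundingChunkIndices": [0],
            },
        ],
    }
}


def summarize_grounding(candidate: dict[str, Any]) -> dict[str, Any]:
    """Extract queries, source URLs, and per-segment citations from one candidate."""
    meta = candidate.get("groundingMetadata")
    if not meta:
        # The model may skip grounding, in which case there is nothing to report.
        return {"queries": [], "sources": [], "supports": []}
    sources = [c.get("web", {}).get("uri", "") for c in meta.get("groundingChunks", [])]
    supports = []
    for support in meta.get("groundingSupports", []):
        # Each support points at chunks by index; ignore out-of-range indices.
        cited = [
            sources[i]
            for i in support.get("groundingChunkIndices", [])
            if 0 <= i < len(sources)
        ]
        supports.append({"text": support.get("segment", {}).get("text", ""), "sources": cited})
    return {"queries": meta.get("webSearchQueries", []), "sources": sources, "supports": supports}


summary = summarize_grounding(SAMPLE_CANDIDATE)
print(summary["queries"])
print(summary["supports"])
```

The empty-result branch matters in practice, because not every response comes back with grounding data.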

SEOs can use this data to compare grounding queries and cited sources against their own content, identify gaps or missing perspectives, and adjust existing content or create new content that better matches how Google interprets the topic.

More About What Grounding Queries Tell Us

Each grounding query offers a glimpse into how Google’s model frames a topic.

They’re not keyword variants. They’re interpretive pivots that show what the model believes it must validate to confidently build an answer.

For example, a single prompt about "healthy dog treats for Australian Shepherds" might yield grounding queries like:

- "grain-free dog treats for allergies"

- "low-fat snacks for active breeds"

- "homemade natural dog treat recipes"

In this example, those extra searches reveal the model's interpretation: Google sees the topic as being about health, breed, and homemade options.

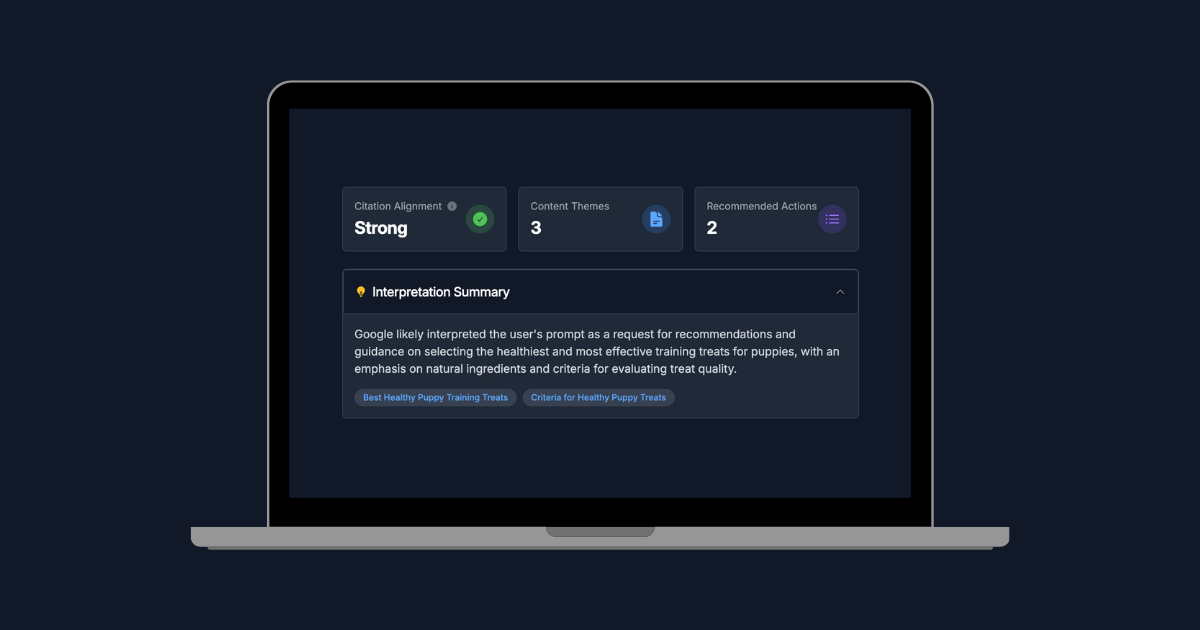

Introducing: Citation Alignment

Grounding data is where Citation Alignment comes in.

What is Citation Alignment?

Citation Alignment is the practice of analyzing and optimizing content using grounding data: the search queries and sources AI systems reference to verify their responses. It focuses on understanding how those systems interpret prompts through grounding queries, and on aligning your content with that interpretation so it can serve as a credible, citable source.

Citation Alignment is not another version of AEO or GEO. It's part of them. It's a way to work with what we can actually see in AI search behavior. The goal is to understand how your content fits into Google's process of verification and citation, and to improve content where it makes sense.

How Hi, Moose Captures and Compares Grounding Queries

When you run a Citation Alignment check in Hi, Moose, the following happens:

- The system calls the Gemini API to capture the grounding queries Google AI Mode issued for your prompt⁵. In most cases, Google returns grounding queries, but not always. When the model is highly confident that it can answer correctly from internal knowledge, it may skip external grounding altogether.

- It scrapes your page content.

- It uses AI to summarize what those grounding queries reveal about Google’s interpretation.

- It compares that interpretation to your content, showing where you align strongly, partially, or weakly.

- It tracks interpretation drift, which highlights when Google’s grounding queries change over time.

The Citation Alignment tool within Hi, Moose makes it more convenient to run these steps and maintain a history.
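To give a feel for the comparison step, here is a deliberately crude sketch that labels each grounding query as strong, partial, or weak based on how many of its words appear on a page. This is not how Hi, Moose does it (the tool uses AI to summarize and compare). Plain word overlap is swapped in here as a stand-in, and the function names, stopword list, and thresholds are all hypothetical.

```python
import re

# Hypothetical stopword list and thresholds, chosen only for illustration.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to"}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens with stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def alignment(query: str, page_text: str, strong: float = 0.75, partial: float = 0.4) -> str:
    """Label a query by the share of its tokens that appear in the page text."""
    query_tokens = tokens(query)
    if not query_tokens:
        return "weak"
    coverage = len(query_tokens & tokens(page_text)) / len(query_tokens)
    if coverage >= strong:
        return "strong"
    if coverage >= partial:
        return "partial"
    return "weak"


page = (
    "Our grain-free dog treats are made for dogs with allergies. "
    "Homemade recipes included."
)
grounding_queries = [
    "grain-free dog treats for allergies",
    "low-fat snacks for active breeds",
    "homemade natural dog treat recipes",
]
report = {query: alignment(query, page) for query in grounding_queries}
for query, label in report.items():
    print(f"{label:8} {query}")
```

Word overlap misses synonyms and paraphrases, which is one reason a semantic comparison is a better fit for real use.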

Citation Alignment Use Cases

Grounding queries are some of the closest real-world evidence we have of how AI systems verify their responses. They show what Google’s AI believes is necessary to support an answer, the bridge between query, verification, and citation.

For SEOs and marketers, this creates a new kind of visibility work:

- We can see how Google frames topics at a sub-intent level.

- We can find gaps in our content where we can expand to better align with what Google uses for citation.

- We can track how those interpretations shift over time, revealing changes in topical emphasis or intent, and the emergence of new, authoritative sources (your brand).
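Tracking those shifts can start very simply. The sketch below compares two snapshots of grounding queries for the same prompt and reports what was added, what was removed, and how much overlap remains. The function name, the second snapshot, and the Jaccard-style stability score are illustrative assumptions, not a description of any particular tool.

```python
def query_drift(previous: list[str], current: list[str]) -> dict:
    """Compare two snapshots of grounding queries for the same prompt."""
    prev = {q.strip().lower() for q in previous}
    curr = {q.strip().lower() for q in current}
    union = prev | curr
    return {
        "added": sorted(curr - prev),
        "removed": sorted(prev - curr),
        # Jaccard similarity: 1.0 means identical sets, 0.0 means no overlap.
        "stability": len(prev & curr) / len(union) if union else 1.0,
    }


# The second snapshot is invented for illustration.
last_month = [
    "grain-free dog treats for allergies",
    "low-fat snacks for active breeds",
    "homemade natural dog treat recipes",
]
this_month = [
    "grain-free dog treats for allergies",
    "low-fat snacks for active breeds",
    "vet-recommended dog treats",
]
drift = query_drift(last_month, this_month)
print(drift)
```

Exact string matching will treat a lightly reworded query as a brand-new one, so a real comparison would likely need some fuzzier notion of similarity.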

We're not suggesting that you write or rewrite content for every possible angle. It’s about understanding why AI systems trust certain content, then adjusting or building for that when you know it makes sense for your target reader.

Other Models May Behave Differently

Everything in this article refers to Google AI Overviews and AI Mode, which are powered by Gemini. The Gemini API publicly returns structured groundingMetadata, including the actual search queries, the retrieved sources, and how each is cited in the response.

Perplexity provides visible citations that show which sources were referenced in an answer, but it does not expose the underlying grounding queries or the retrieval logic behind them. Its multi-stage retrieval process is proprietary and not accessible through the public APIs (as of Oct 2025). We'll cover the Perplexity data SEOs can use in a later post.

ChatGPT Search also surfaces citations within responses, but it does not expose the model’s grounding or fan-out behavior. Unofficial third-party browser extensions do exist to capture fan-out queries (we see those making the rounds on LinkedIn and X).

At the time of writing, Gemini is the only widely used AI system known to return structured grounding data through an official API, allowing developers and SEOs to inspect the searches behind its answers as well as the citations.

Where We Go Next

This work is still early, and every week we uncover new questions (and new confusions) about how AI systems interpret and cite content. We’ll keep experimenting, reading, collaborating, and learning across everything happening in AI and search.

One thing we are not doing is promoting black-hat tactics for short-term gains. Building tooling around those would be irresponsible and dumb. We want to understand these systems better and, when it makes sense, build tooling around activity that supports long-term SEO and AEO strategies.

It's an exciting, nerve-racking, and often exhausting time to be in SEO. We've chosen to embrace the changes, be critical when needed, and give credit when deserved. We hope to be a part of this space for a long time to come.

Want to see how we're putting all this into practice? Try Hi, Moose and start experimenting with Citation Alignment yourself.

Sources

1. Google Blog: A Deeper Search with AI Mode
   https://blog.google/products/search/google-search-ai-mode-update/#deep-search

2. Google Developers: AI Overviews & AI Mode
   https://developers.google.com/search/docs/appearance/ai-features

3. Gemini API Docs: Grounding with Google Search
   https://ai.google.dev/gemini-api/docs/google-search

4. Vertex AI Docs: Grounding Overview
   https://cloud.google.com/vertex-ai/generative-ai/docs/grounding/overview

5. Google ADK Docs: Google Search Grounding
   https://google.github.io/adk-docs/grounding/google_search_grounding/

6. Effective Large Language Model Adaptation for Improved Grounding and Citation Generation
   https://arxiv.org/pdf/2311.09533